Big Data Theory and Computation

Organizer: Guang Cheng, Professor of Statistics, Department of Statistics, Purdue University

Chair: Shih-Kang Chao, Visiting Assistant Professor of Statistics, Department of Statistics, Purdue University

Speakers

- Shih-Kang Chao, Visiting Assistant Professor of Statistics, Department of Statistics, Purdue University

- Zijian Guo, Assistant Professor of Statistics, Department of Statistics and Biostatistics, Rutgers University

- Xiao Han, Postdoctoral Scholar, USC Marshall Statistics Group, University of Southern California

- Mingao Yuan, Ph.D. Candidate, Department of Mathematics, Indiana University-Purdue University Indianapolis (IUPUI)

Schedule

Wednesday, June 6, 1:30-3:30 p.m. in STEW 214 CD

| Time | Speaker | Title |

|---|---|---|

| 1:30 - 2:00 p.m. | Shih-Kang Chao |

Diffusion Approximation to Stochastic Mirror Descent with Statistical Applications |

|

Abstract: Stochastic gradient descent (SGD) is a popular algorithm that can handle extremely large data sets due to its low computational cost at each iteration and low memory requirement. Asymptotic distributional results of SGD are very well-known (Kushner and Yin, 2003). However, a major drawback of SGD is that it does not adapt well to the underlying structure of the solution, such as sparsity or constraints. Thus, many variations of SGD have been developed, and a lot of them are based on the concept of stochastic mirror descent (SMD). In this paper, we develop diffusion approximation to SMD with constant step size, using a theoretical tool termed "local Bregman divergence". In particular, we establish a novel continuous mapping theorem type result for a sequence of conjugates of the local Bregman divergence. The diffusion approximation results shed light on how to fine-tune an l1-norm based SMD algorithm to yield "asymptotically unbiased" estimator which zeros inactive coefficients. |

||

| 2:00-2:30 p.m. | Zijian Guo | Semi-supervised Inference for Explained Variance in High-dimensional Linear Regression and Its Applications |

|

Abstract: We consider statistical inference for the explained variance $\beta^{\intercal}\Sigma \beta$ under the high-dimensional linear model $Y=X\beta+\epsilon$ in the semi-supervised setting, where $\beta$ is the regression vector and $\Sigma$ is the design covariance matrix. A calibrated estimator, which efficiently integrates both labelled and unlabelled data, is proposed. It is shown that the estimator achieves the minimax optimal rate of convergence in the general semi-supervised framework. The optimality result characterizes how the unlabelled data affects the minimax optimal rate. Moreover, the limiting distribution for the proposed estimator is established and data-driven confidence intervals for the explained variance are constructed. We further develop a randomized calibration technique for statistical inference in the presence of weak signals and apply the obtained inference results to a range of important statistical problems, including signal detection and global testing, prediction accuracy evaluation, and confidence ball construction. The numerical performance of the proposed methodology is demonstrated in simulation studies and an analysis of estimating heritability for a yeast segregant data set with multiple traits. |

||

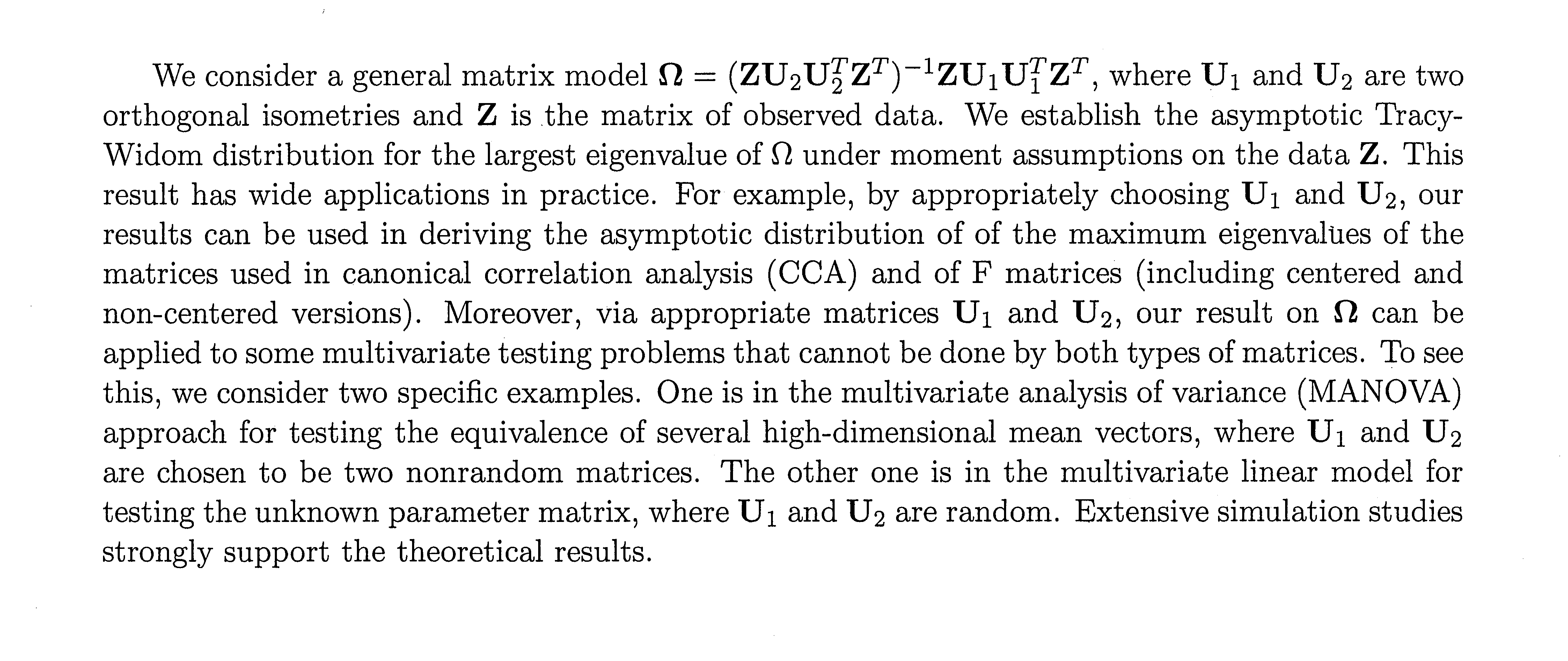

| 2:30-3:00 p.m. | Xiao Han | A unified matrix model: the largest eigenvalue and its applications |

|

||

| 3:00 - 3:30 p.m. | Mingao Yuan | Likelihood Ratio Test for Stochastic Block Models with Bounded Degrees |

|

Abstract: A fundamental problem in network data analysis is to test whether a network contains statistical significant communities. We study this problem in the stochastic block model context by testing H0: Erdos-Renyi model vs. H1: stochastic block model. This problem serves as the foundation for many other problems including the testing-based methods for determining the number of communities and community detection. Existing work has been focusing on growing-degree regime while leaving the bounded-degree case untreated. Here, we propose a likelihood ratio type procedure based on regularization to test stochastic block models with bounded degrees. We derive the limiting distributions as power Poisson laws under both null and alternative hypotheses, based on which the limiting power of the test is carefully analyzed. The joint impact of signal-to-noise ratio and the number of communities on the asymptotic results is also unveiled. The proposed procedures are examined by both simulated and real-world network datasets. Our proofs depend on the contiguity theory for random regular graphs developed by Janson (1995). |

||