What is Causal Inference? Why Do We Care? Can We Do It?¶

Causal inference refers to the design and analysis of data for uncovering causal relationships between treatment/intervention variables and outcome variables.

We care about causal inference because a large proportion of real-life questions of interest are questions of causality, not correlation.

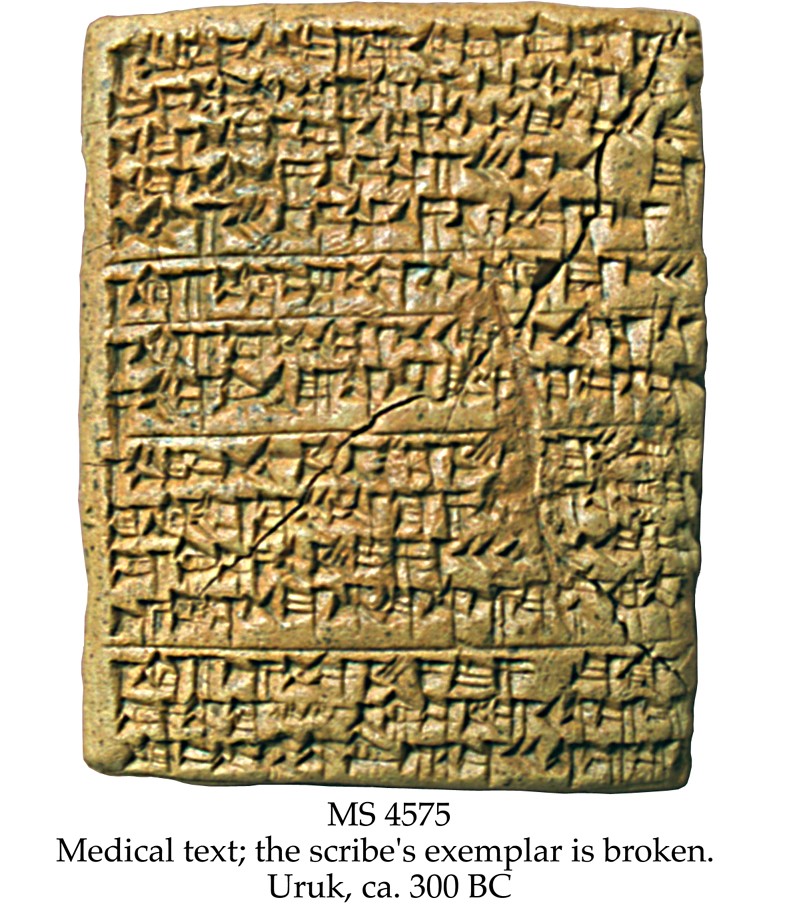

Causality has been of concern since the dawn of civilization.

From: Mesopotamiske farmakopéer, accessed September 26, 2014.

Rigorous statistical frameworks for causality that can be applied to many types of data have been established for only a century.

Example: The Causal Effect of Smoking¶

There is a wide consensus that smoking is bad for humans. But what is the basis for this consensus?

Experiments on smoking cannot be performed on humans due to medical ethics.

There exist significant observational data on human smoking, in which no controls are placed over how smoking is assigned to the subjects. What inferences do we obtain from such observational studies?

Cochran (1968: p. 297) gives three observational studies on smoking: a six-year Canadian study, a five-year British study, and a 20-month U.S.A. study.

The outcomes in these studies are summarized by the death rates per 1000 person-years, defined as

$$ \frac{\text{Total # of Deaths}}{\text{Total # of Person-Year Exposures}} \times 10^3. $$| Smoking Group | Canadian | British | U.S.A. |

|---|---|---|---|

| Non-smokers | 20.2 | 11.3 | 13.5 |

| Cigarettes only | 20.5 | 14.1 | 13.5 |

| Cigars and/or pipe | 35.5 | 20.7 | 17.4 |

🤔 From these studies, it appears that cigar/pipe smokers should instead just smoke cigarettes. Is that reasonable? What else can be lurking behind the scenes?

| Smoking Group | Canadian Mean Age | British Mean Age | U.S.A. Mean Age |

|---|---|---|---|

| Non-smokers | 54.9 | 49.1 | 57.0 |

| Cigarettes only | 50.5 | 49.8 | 53.2 |

| Cigars and/or pipe | 65.9 | 55.7 | 59.7 |

💡 Cigar/pipe smokers are older! That can explain (in part) their higher death rate.

💡 Cigarette smokers are fairly young in this dataset, and so likely did not develop any smoking-related diseases during the time period of their respective studies.

Confounding exists in these studies: cigar smokers are more likely to be older than other subjects, and hence more likely to die. Standard analyses cannot disentangle these two in the observational study!

Example: What Have We Learned?¶

Systematic differences in pre-treatment variables/covariates for subjects assigned different treatments confound inferences and introduce bias.

Confounding means a difference between the treatment groups, other than the treatments, related to the response (Freedman, 2009: p. 2).

Carefully designed experiments are the gold standard for causal inference because they can minimize bias from confounding (among other problems), and enable inferences with minimal assumptions.

Cochran (1965, 1968), Rubin (1973): Observational studies can be designed so as to reduce biases due to confounding, and yield valid causal inferences.

So Why Care About Causal Inference? And Can We Do It?¶

The typical goals in scientific investigations are to identify

- factors that affect a response, and

- details of these causal relationships.

Designed experiments are the gold standard for causal inference because the controls and physical act of randomization in experiments guarantee, in expectation, no bias due to confounding, and methods for statistical analyses that require no parametric assumptions (Imbens & Rubin, 2015).

Observational studies can yield valid causal inferences if they are designed prior to analysis, so as to approximate a randomized experiment.

Machine learning algorithms can enable powerful causal inferences in Big Data settings with many covariates and treatment effect heterogeneity.

Outline¶

The fundamentals of the Rubin Causal Model

Applications of machine learning algorithms for causal inference

Designing observational studies for valid causal inference

Part 1: The Fundamentals of the Rubin Causal Model¶

The Rubin Causal Model: Science, Learning, and Decisions¶

The Rubin Causal Model (RCM) is a rigorous statistical framework for drawing causal inferences from many types of studies.

Applications of the RCM involve three major steps.

- Science: Define experimental units, covariates, treatments, potential outcomes, estimands.

- Learning: Define assignment mechanism, inferential method.

- Decisions: Perform inference, decide what to do next.

☝ There exist other frameworks and models for causal inference. One prominent framework has been developed by Judea Pearl, and is described in Pearl (2009). Due to the focus of the presentations and demonstrations in this boot camp, we will just consider the RCM in this presentation. To learn more about other causal inference frameworks, be sure to attend Purdue's Department of Statistics Distinguished Theme Seminar Series in the fall 2021 semester!

Science¶

Before designing/analyzing an experiment/observational study, it is important to clearly define the Science of the problem.

Science: Define experimental units, covariates, treatments, potential outcomes, estimands.

Experimental unit: physical object(s) at a particular point in time.

An experimental unit assigned a treatment at a particular point in time could have been exposed to an alternative treatment at that same point in time.

Each experimental unit and treatment pair corresponds to a potential outcome.

| Unit | Control Potential Outcome | Treatment Potential Outcome |

|---|---|---|

| $i$ | $Y_i(0)$ | $Y_i(1)$ |

A unit-level causal effect is a comparison of potential outcomes for the same unit at the same point in time post-treatment.

One standard unit-level causal effect is simply $Y_i(1) - Y_i(0)$.

☝ A causal effect is not defined in terms of comparisons of outcomes at different times, as in before-after comparisons.

The Fundamental Problem of Causal Inference¶

| Unit | Control (0) or Treatment (1)? | $Y_i(0)$ | $Y_i(1)$ | $Y_i(1) - Y_i(0)$ |

|---|---|---|---|---|

| 1 | 1 | ? | ✔ | ? |

For an experimental unit, at most one of the potential outcomes can be realized and thus observed.

To learn about causal effects, we need

- multiple units, and

- knowledge of how the units were assigned treatments.

Several subtle issues must be considered in the presence of multiple units, and with an assignment mechanism.

Subtleties with Potential Outcomes for Multiple Units¶

We denoted the potential outcome for unit $i$ under treatment $t$ by $Y_i(t)$.

Causal effects were defined as comparisons of potential outcomes.

Both are ambiguous under two scenarios for the Science.

- Hidden varieties of treatment levels. To unambiguously define causal effects, explicitly recognize treatment variations in the Science.

- Interference among experimental units. Interference exists when the potential outcome for an experimental unit is not only a function of their treatment, but also of the treatments for other experimental units. In this case the causal effect for an unit is ambiguous because the actions of other units influence their potential outcomes.

Big Assumption for Causal Inference: SUTVA¶

The Stable Unit-Treatment Value Assumption (SUTVA, Imbens & Rubin, 2015: p. 10) consists of two conditions on the Science that yield unambiguous potential outcomes and causal effects.

- Well-defined potential outcomes: For each unit, there are no different forms or versions of each treatment level that lead to different potential outcomes.

- No interference: The potential outcomes for any unit do not vary with the treatments assigned to other units.

Experiments and observational studies are typically designed to ensure that SUTVA holds.

If SUTVA is violated, it is generally more difficult to describe and learn about the Science.

The Science table under SUTVA becomes more wieldy.

| Unit | $X_i$ | $Y_i(0)$ | $Y_i(1)$ |

|---|---|---|---|

| 1 | $X_1$ | $Y_1(0)$ | $Y_1(1)$ |

| 2 | $X_2$ | $Y_2(0)$ | $Y_2(1)$ |

| $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ |

| $N$ | $X_N$ | $Y_N(0)$ | $Y_N(1)$ |

Causal Estimands Under SUTVA¶

Finite-population causal estimand: Comparison of potential outcomes for one set of experimental units.

$$ \left \{ Y_i(\mathrm{1}) : i = 1, \ldots, N \right \} \ \mathrm{vs.} \ \left \{ Y_i(\mathrm{0}) : i = 1, \ldots, N \right \} $$The choice of estimand is typically governed by the question of interest for the Science.

For example, in the previous table, we can specify the following three estimands.

- Average Effect: $\tau = \bar{Y}(1) - \bar{Y}(0) = \frac{1}{N} \sum_{i=1}^N Y_i(1) - \frac{1}{N} \sum_{i=1}^N Y_i(0)$

- Median Unit-Level Effect: $\mathrm{Median} \left \{ Y_i(1) - Y_i(0) : i = 1, \ldots, N \right \}$

- Marginal Median Effect: $\mathrm{Median} \left \{ Y_i(1)\right \} - \mathrm{Median} \left \{ Y_i(0) \right \}$

The Important Role of the Assignment Mechanism¶

| Unit | $X_i$ | Control (0) or Treatment (1)? | $Y_i(0)$ | $Y_i(1)$ | $Y_i(1) - Y_i(0)$ |

|---|---|---|---|---|---|

| 1 | $X_1$ | 1 | ? | ✔ | ? |

| 2 | $X_2$ | 0 | ✔ | ? | ? |

| $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ |

| $N$ | $X_N$ | 1 | ? | ✔ | ? |

We never observe all potential outcomes or all unit-level effects.

Causal inference is a missing data problem, and a key role is played by the assignment mechanism.

Assignment mechanism: Description of how treatments are assigned to the experimental units (and the corresponding outcomes are observed).

Observed outcomes can possibly help us infer causal effects only when we account for how units came to receive their treatments.

☝ Potential outcomes and causal effects are well-defined regardless of the assignment mechanism.

Example: The Perfect Doctor (Imbens & Rubin, 2015: p. 14 - 15)¶

| Subject | Drug (0) or Surgery (1)? | $Y_i(0)$ | $Y_i(1)$ | $Y_i(1) - Y_i(0)$ |

|---|---|---|---|---|

| 1 | 1 | 7 | 6 | |

| 2 | 6 | 5 | -1 | |

| 3 | 1 | 5 | 4 | |

| 4 | 8 | 7 | -1 | |

| 5 | 2 | 3 | 1 | |

| 6 | 6 | 3 | -3 | |

| 4 | 5 | 1 |

On average, surgery increases survival time by 1 year.

$$ \bar{Y}(1) - \bar{Y}(0) = \frac{1}{6} \sum_{i=1}^6 Y_i(1) - \frac{1}{6} \sum_{i=1}^6 Y_i(0) = 1 $$Suppose that the doctor running this clinical trial was perfect: she only assigns those procedures to the subjects that give the maximum survival time.

In this case, the observed data would be:

| Subject | Drug (0) or Surgery (1)? | $Y_i(0)$ | $Y_i(1)$ | $Y_i(1) - Y_i(0)$ |

|---|---|---|---|---|

| 1 | 1 | ? | 7 | ? |

| 2 | 0 | 6 | ? | ? |

| 3 | 1 | ? | 5 | ? |

| 4 | 0 | 8 | ? | ? |

| 5 | 1 | ? | 3 | ? |

| 6 | 0 | 6 | ? | ? |

At first glance, the observed outcomes appear to suggest that surgery is worse than drug on average.

$$ \bar{y}^{\mathrm{obs}}(1) - \bar{y}^{\mathrm{obs}}(0) = -5/3 $$In general, $\bar{y}^{\mathrm{obs}}(1) - \bar{y}^{\mathrm{obs}}(0)$ would not be an appropriate estimator of $\bar{Y}(1) - \bar{Y}(0)$ if the assignment mechanism depends on the potential outcomes.

In this case, this estimator is ignoring the confounding from the Perfect Doctor's (lurking) judgments about assignment of surgery and drug to the subjects, and is accordingly biased.

An estimator in this setting should take into account the relationships between the potential outcomes that governed the Perfect Doctor's assignment mechanism.

| Subject | Drug (0) or Surgery (1)? | $Y_i(0)$ | $Y_i(1)$ | $Y_i(1) - Y_i(0)$ |

|---|---|---|---|---|

| 1 | 1 | $\leq 7$ | 7 | $\geq 0$ |

| 2 | 0 | 6 | $\leq 6$ | $\leq 0$ |

| 3 | 1 | $\leq 5$ | 5 | $\geq 0$ |

| 4 | 0 | 8 | $\leq 8$ | $\leq 0$ |

| 5 | 1 | $\leq 3$ | 3 | $\geq 0$ |

| 6 | 0 | 6 | $\leq 6$ | $\leq 0$ |

The Importance of the Assignment Mechanism¶

One cannot hope to obtain valid causal inferences from observed outcomes if one ignores the assignment mechanism.

Valid causal inference is possible only if one considers why the units received one treatment rather than another.

Knowledge of the assignment mechanism is also sufficient for certain causal inferences (Imbens & Rubin, 2015).

We proceed to introduce notation, definitions, and examples for assignment mechanisms.

Notation for Covariates and Potential Outcomes¶

Suppose we have $N$ units, indexed by $i$.

Covariates: Pre-treatment/background characteristics of the experimental units, or their data that are unaffected by treatment assignment.

$X_i$: $K$-component vector of covariates for unit $i$. Each $X_i \in \mathbb{R}^K$.

$\mathbf{X} = \begin{pmatrix} X_1' \\ X_2' \\ \vdots \\ X_N' \end{pmatrix}$: $N \times K$ matrix of covariates for all units.

$Y_i(0), Y_i(1)$: control and treatment potential outcomes for unit $i$.

$\mathbf{Y}(0) = \begin{pmatrix} Y_1(0) \\ Y_2(0) \\ \vdots \\ Y_N(0) \end{pmatrix}$: $N$-component vector of control potential outcomes for all units.

$\mathbf{Y}(1) = \begin{pmatrix} Y_1(1) \\ Y_2(1) \\ \vdots \\ Y_N(1) \end{pmatrix}$: $N$-component vector of treatment potential outcomes for all units.

☝ We assume SUTVA holds.

Notation for Treatment Assignment¶

$W_i$: treatment assignment indicator for unit $i$.

$$ W_i = \begin{cases} 1 & \mbox{if unit} \ i \ \mbox{is assigned treatment,} \\ 0 & \mbox{if unit} \ i \ \mbox{is assigned control}. \end{cases} $$$\mathbf{W} = \begin{pmatrix} W_1 \\ W_2 \\ \vdots \\ W_N \end{pmatrix}$: $N$-component vector of treatment assignment indicators for all units.

We shall typically denote the number of units assigned treatment and control as, respectively, $$ N_1 = \sum_{i=1}^N W_i, $$ $$ N_0 = \sum_{i=1}^N \left ( 1-W_i \right ). $$

Representation of Observed Outcomes¶

Observed outcomes are functions of potential outcomes and treatment assignment indicators.

$$ y_i^{\mathrm{obs}} = W_iY_i(1) + (1-W_i)Y_i(0) $$$$ y_i^{\mathrm{mis}} = (1-W_i)Y_i(1) + W_iY_i(0) $$☝ Potential outcomes and causal effects are well-defined regardless of treatment assignment indicators.

⚠ Do not confuse potential outcomes with observed outcomes!

Definition of the Assignment Mechanism¶

The assignment mechanism is a description of the probability of any vector of assignments for the entire population.

Assignment mechanism: $\mathrm{Pr}\{\mathbf{W} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \}$.

☝ $\displaystyle \sum_{\mathbf{w} \in \{0,1\}^N} \mathrm{Pr}\{\mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \} = 1$ (Imbens & Rubin, 2015: p. 34).

Unit-level assignment probability for unit $i$: $p_i(\mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1)) = \displaystyle \sum_{\mathbf{w}: w_i = 1} \mathrm{Pr}\{\mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \}$.

Regularity Assumptions and the Propensity Score¶

Individualistic Assignment¶

There exists a function $q: \mathbb{R}^{K+2} \rightarrow (0,1)$ such that for all subjects $i$, $$ p_i(\mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1)) = q(X_i, Y_i(0), Y_i(1)) $$ and $$\mathrm{Pr} \{ \mathbf{W} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \} = c \displaystyle \prod_{i=1}^N q(X_i, Y_i(0), Y_i(1))^{W_i}\{1-q(X_i,Y_i(0),Y_i(1))\}^{1-W_i} $$ for some set of $(\mathbf{W}, \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1))$, where $c$ is the normalization constant for the probability mass function of the treatment assignment mechanism.

💡 If an assignment mechanism is not individualistic, then some subjects' treatment assignments would depend on the covariates and potential outcomes of other subjects, which would complicate the design and analysis of experiments or observational studies.

Probabilistic Assignment¶

For all subjects $i$, $0 < p_i(\mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1)) < 1$.

💡 A probabilistic assignment mechanism permits the consideration of all subjects for the design and analysis of an experiment or observational study, and reduces the risk of extrapolation biases when estimating treatment effects.

Unconfounded Assignment¶

For any $\mathbf{w} \in \{0, 1\}^N$ and potential outcome vectors $\mathbf{Y}(0), \mathbf{Y}'(0), \mathbf{Y}(1), \mathbf{Y}'(1) \in \mathbb{R}^N$, $$ \mathrm{Pr} \{ \mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \} = \mathrm{Pr} \{ \mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}'(0), \mathbf{Y}'(1) \}. $$

💡 No lurking confounders! The observed covariates contain all the information governing treatment assignment, and no additional variables associated with the outcomes are related to treatment assignment.

Regular assignment mechanism/Strongly ignorable treatment assignment: Combination of the individualistic, probabilistic, and unconfounded assignment assumptions.

💡 A regular assignment mechanism justifies designing an observational study so as to compare treated and control subjects with the same covariates for inferring causal effects.

If a treated and control subject have the same covariates, then their treatment assignments have effectively been performed according to some random mechanism.

Comparing treated and control subjects with the same covariates should thus yield unbiased inferences for the treatment effects in the designed observational study.

Propensity score for a regular assignment mechanism: Unit-level assignment probability, typically denoted by the function $e: \mathbb{R}^K \rightarrow (0,1)$.

Part 2: Applications of Machine Learning Algorithms for Causal Inference¶

Machine Learning for Causal Inference¶

| Experimental Unit $i$ | $\mathbf{X}_i$ | $W_i$ | $Y_{i}(0)$ | $Y_{i}(1)$ |

|---|---|---|---|---|

| $1$ | $\mathbf{X}_1$ | $W_1$ | $Y_{1}(0)$ | $Y_{1}(1)$ |

| $2$ | $\mathbf{X}_2$ | $W_2$ | $Y_{2}(0)$ | $Y_{2}(1)$ |

| $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ |

| $N$ | $\mathbf{X}_N$ | $W_N$ | $Y_{N}(0)$ | $Y_{N}(1)$ |

Machine learning is increasing in popularity for deriving causal inferences from experiments and (especially) observational studies.

Compared to more traditional statistical and regression approaches, machine learning enables the powerful incorporation of covariates for causal inference in the presence of complicated treatment effect heterogeneity.

One standard approach for deriving causal inferences via machine learning corresponds to specifying a model on the observed outcomes, with the predictors consisting of the treatment indicators and covariates.

In certain cases, inferences on the unknown parameters in the machine learning algorithm can be obtained, but without additional assumptions such inferences are technically correlational, not causal.

Consideration of the Rubin Causal Model helps to illuminate valid applications of machine learning algorithms for causal inferences.

💡 The RCM enables one to specify a consistent definition of a causal estimand, i.e., one that does not change depending on the method of analysis for the observed data. Accordingly, causal inferences obtained from different analytical approaches can be directly compared. Also, one does not have to specify a causal estimand in terms of parameters in a model or machine learning algorithm.

Example: Evaluating a Job Training Program (LaLonde, 1986) via Random Forests¶

Experimental Units

$445$ men in 1976.

Covariates

Age, years of education, ethnicity, etc.

Treatment Factor

Activity, with two levels: job training (1) or nothing (0).

Potential Outcome

Annual earnings in 1978 (in dollars).

Assignment Mechanism

CRD with $185$ treated units ($N_1 = 185, N_0 = 260$).

Question of Interest

What is the causal effect of the job training program, accounting for the subjects' covariates?

⚠ For the purpose of today's introductory presentation we give a simple, illustrative case study here. Dr. Rahman will discuss a more complicated dataset on July 14.

Lalonde_data = read.csv("Lalonde_data.csv", header=T)

Lalonde_data = Lalonde_data[,-c(1,14)]

Lalonde_data = Lalonde_data[,c(3:12,2,1)]

head(Lalonde_data)

Perspectives on the Use of Machine Learning for Causal Inference Under the Rubin Causal Model¶

| Unit | $\mathbf{X}_i$ | $W_i$ | $Y_i(0)$ | $Y_i(1)$ |

|---|---|---|---|---|

| 1 | $\mathbf{X}_1$ | 0 | ✔ | ? |

| 2 | $\mathbf{X}_2$ | 1 | ? | ✔ |

| $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ | $\vdots$ |

| N | $\mathbf{X}_N$ | 1 | ? | ✔ |

Super-population perspective (Imbens & Rubin, 2015: p. 113 - 114)

Experimental units are drawn from an infinite super-population of units.

Models are specified for the conditional mean of potential outcomes in the super-population.

The typical causal estimand is the average treatment effect, and can either be formulated in terms of a parameter in the machine learning algorithm (if possible), or can be formulated by means of average predictive comparisons (Gelman and Pardoe, 2007).

The validity of the models, in terms of whether they accurately describe the conditional mean, is immaterial for the large-sample unbiasedness of the estimator of the average treatment effect.

All results (bias, variance, etc.) are asymptotic (large sample) results.

Finite-population perspective

No assumptions for how the experimental units came to be enrolled into the study are necessary.

Machine learning algorithms are specified for the conditional distributions so as to impute missing potential outcomes and derive posterior distributions for causal estimands of interest.

Causal estimands are defined as comparisons of potential outcomes for the finite population of the experimental units in the study.

One is not limited to considering just average treatment effects as the causal estimands.

Causal estimands are separated from the potential outcome models induced by machine learning algorithms (so that the Science is separated from Learning).

The validity of the machine learning algorithm could be important for the consistency of the causal effect estimators, and should be diagnosed before drawing final conclusions.

The physical act of randomization in completely randomized designs can yield robustness to model misspecification (Garcia-Horton, 2015).

Finite-Population Perspective: Multiple Imputation of Missing Potential Outcomes¶

Certain machine learning algorithms can induce a type of probability model for the potential outcomes.

The set of potential outcomes $(Y_i(0), Y_i(1))$ is equivalent to the set of observed and missing potential outcomes $(y_i^{\mathrm{obs}}, y_i^{\mathrm{mis}})$, where

$$ y_i^{\mathrm{obs}} = W_iY_i(1) + (1-W_i)Y_i(0), $$$$ y_i^{\mathrm{mis}} = (1-W_i)Y_i(1) + W_iY_i(0). $$If a distribution $p \left (y_i^{\mathrm{mis}} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$ can be specified based on the machine learning algorithm, then each $y_i^{\mathrm{mis}}$ can be imputed, and the value of the causal estimand under the imputation can be calculated.

The imputation is not perfect, as the predictions obtained from a machine learning algorithm are not perfect.

Multiple imputations of the missing potential outcomes can enable one to perform causal inferences that reflect uncertainty due to the assignment mechanism (i.e., due to the missingness in potential outcomes).

A key assumption for the valid application of multiple imputation is that the assignment mechanism is unconfounded (in addition to probabilistic and individualistic).

Addressing Controversies Over Causal Inference Based on Linear Regression¶

"Experiments should be analyzed as experiments, not observational studies" (Freedman, 2006: p. 691).

Implication: Analyze experiments as you designed them, in particular, by means of randomization-based inferences. Don't analyze experiments by regression models (and, by extension, machine learning algorithms) typically used in the analyses of observational studies.

Counterargument:

Experiments have unconfounded assignment mechanisms.

$\Rightarrow$ Potential outcomes in experiments are missing at random.

$\Rightarrow$ Imputation methods based on models can be used to impute missing potential outcomes and perform inferences on finite-population causal estimands (assuming the models are appropriate).

"... randomization does not justify the assumptions behind the ols [ordinary least squares] model" (Freedman, 2008: p. 181).

Counterargument:

Randomization corresponds to an unconfounded assignment mechanism, which justifies standard types of imputation methods.

If a model does not capture the relationships between the potential outcomes, treatments, and covariates, specify a better model.

- Machine learning algorithms could yield valid imputation methods, and be more flexible than standard linear regression models.

Bayesian Implementations of Multiple Imputation Methods: More to Come with Dr. Rao on July 15!¶

Under the finite-population perspective, the parameters $\boldsymbol{\theta}$ of a machine learning algorithm are effectively nuisance parameters, and should be integrated out.

$$ p \left (y_i^{\mathrm{mis}} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right ) = \int p \left (y_i^{\mathrm{mis}} \mid \boldsymbol{\theta}, \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right ) p \left ( \boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )d\theta $$Ideally, the integration of the nuisance model parameters should be performed under the Bayesian paradigm, so as to correctly propagate the uncertainties associated with the parameters.

A complication that could potentially arise under the Bayesian paradigm is deriving $p \left ( \boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$.

If draws can be obtained from $p \left ( \boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$, then draws can immediately be obtained from $p \left (y_i^{\mathrm{mis}} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$ by virtue of the integral above.

☝ The $\int$ operation corresponds to both "summa" and "simulate"!

In this manner, implementing the multiple imputation approach under the Bayesian paradigm is conceptually straightforward.

Bootstrap Approximation to the Bayesian Implementation¶

The bootstrap (Efron, 1979) can be used to approximate the Bayesian approach for multiple imputation, i.e., to approximate the integration of unknown (nuisance) parameters.

In particular, we create a bootstrap distribution to approximate $p \left ( \boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$ (Little and Rubin, 2002: p. 216 - 217).

Calculate the bootstrap distribution $\mathcal{B} \left (\boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$.

For bootstrap sample $m = 1, \ldots, M$:

Draw $\tilde{\theta}^{(m)} \sim \mathcal{B} \left ( \boldsymbol{\theta} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W} \right )$.

Draw $\mathbf{y}^{\mathrm{mis}, (m)} \sim p \left ( \mathbf{y}^{\mathrm{mis}} \mid \mathbf{X}, \mathbf{y}^{\mathrm{obs}}, \mathbf{W}, \tilde{\theta}^{(m)} \right )$.

Calculate the causal estimand of interest based on the imputed dataset.

Derive causal inferences based on the set of drawn causal estimands.

Example: Analyzing the Job Training Program Experiment With A Random Forest Model for the Potential Outcomes¶

We use the randomForest package in R and the nonparametric bootstrap to infer the treatment effect in the job training program.

⚠ In the interest of time, and for the purpose of giving an illustrative case study, we are skipping several important steps associated with analyzing data via machine learning algorithms.

A more detailed treatment of causal inference via random forests is provided by Lu et al. (2018) and Künzel et al. (2019).

#install.packages("randomForest")

library(randomForest)

set.seed(1)

number_of_bootstrap_draws = 10^3

treatment_effect_estimates = rep(NA, number_of_bootstrap_draws)

Lalonde_data_treated = Lalonde_data[Lalonde_data$TREAT==1,]

Lalonde_data_control = Lalonde_data[Lalonde_data$TREAT==0,]

Lalonde_randomForest_treated = randomForest(x=Lalonde_data_treated[,1:10],

y=Lalonde_data_treated[,12])

Lalonde_randomForest_control = randomForest(x=Lalonde_data_control[,1:10],

y=Lalonde_data_control[,12])

treatment_effect_estimate = mean(c((Lalonde_data_treated[,12] -

predict(Lalonde_randomForest_control, Lalonde_data_treated[,1:10])),

(predict(Lalonde_randomForest_treated, Lalonde_data_control[,1:10]) -

Lalonde_data_control[,12])))

print(paste("The treatment effect estimate obtained from random forests is:", treatment_effect_estimate))

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

Lalonde_data_new_treated = Lalonde_data_treated[sample((1:nrow(Lalonde_data_treated)), replace=TRUE),]

Lalonde_randomForest_new_treated = randomForest(x=Lalonde_data_new_treated[,1:10],

y=Lalonde_data_new_treated[,12])

Lalonde_data_new_control = Lalonde_data_control[sample((1:nrow(Lalonde_data_control)), replace=TRUE),]

Lalonde_randomForest_new_control = randomForest(x=Lalonde_data_new_control[,1:10],

y=Lalonde_data_new_control[,12])

treatment_effect_estimates[i] = mean(c((Lalonde_data_new_treated[,12] -

predict(Lalonde_randomForest_new_control, Lalonde_data_new_treated[,1:10])),

(predict(Lalonde_randomForest_new_treated, Lalonde_data_new_control[,1:10]) -

Lalonde_data_new_control[,12])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

hist(treatment_effect_estimates, main="Bootstrap Distribution of Estimates of Causal Estimand", xlab="Treatment Effect")

mean(treatment_effect_estimates)

sd(treatment_effect_estimates)

quantile(treatment_effect_estimates, prob=c(0.025,0.25,0.5,0.75,0.975))

☝ The estimates of the unit-level causal effects are based on the observed and imputed potential outcomes.

Comparing the Results from the Random Forest Algorithm to the Standard Linear Model Inference¶

Lalonde_linear_model = lm(RE78 ~ MARR + NODEGREE + BLACK + HISPANIC + EDUC + AGE + RE74 + RE75 + U74 + U75 + TREAT,

data=Lalonde_data)

summary(Lalonde_linear_model)

#1.671e+03 + c(-1,1)*qt(0.975, df=433)*6.411e+02

Part 3: Designing Observational Studies for Valid Causal Inference¶

The Need to Design Observational Studies to Reduce Bias in Their Causal Inferences¶

An observational study involves an assignment mechanism whose functional form is unknown.

The analyses of observational studies are typically complicated by systematic differences in covariates across the different treatments.

Such differences can confound inferences and introduce bias.

Cochran (1965, 1968), Rubin (1973): Observational studies can be designed so as to reduce biases due to confounding, and yield valid causal inferences.

☝ Randomized experiments typically have the desirable feature that if sufficient care is taken in their design/randomization, then different statistical techniques will yield the correct answer, potentially with different levels of precision.

Example: Covariate Balance in the Job Training Program¶

#install.packages("vioplot")

library(vioplot)

options(warn=-1)

experiment_data = Lalonde_data

numerical_covariate_balance = function(treated, control, covariate_name)

{

mean_t = mean(treated)

mean_c = mean(control)

var_t = var(treated)

var_c = var(control)

pooled_var = (var_t*(length(treated)-1) + var_c*(length(control)-1))/(length(treated)+length(control)-2)

standard_err = (pooled_var*(1/length(treated) + 1/length(control)))^0.5

degrees_of_freedom = length(treated) + length(control) - 2

test_statistic = (mean_t - mean_c)/(standard_err)

p_value = 2*min(pt(test_statistic, df=degrees_of_freedom, lower.tail=TRUE),

pt(test_statistic, df=degrees_of_freedom, lower.tail=FALSE))

vioplot(treated, control, names=c("Treatment", "Control"), col="cyan", horizontal=TRUE, side="right")

print(paste(covariate_name,":", mean_t, ",", mean_c, ",", p_value))

}

categorical_covariate_balance = function(treated, control, covariate_name)

{

p_value = prop.test(x=c(sum(treated),sum(control)),

n=c(length(treated), length(control)),

alternative="two.sided", correct=TRUE)$p.value

print(paste(covariate_name,":", mean(treated), ",", mean(control), ",", p_value))

}

categorical_covariate_balance(experiment_data$MARR[experiment_data$TREAT==1],

experiment_data$MARR[experiment_data$TREAT==0],

"Marriage")

categorical_covariate_balance(experiment_data$NODEGREE[experiment_data$TREAT==1],

experiment_data$NODEGREE[experiment_data$TREAT==0],

"No High School Degree")

categorical_covariate_balance(experiment_data$BLACK[experiment_data$TREAT==1],

experiment_data$BLACK[experiment_data$TREAT==0],

"African-American")

categorical_covariate_balance(experiment_data$HISPANIC[experiment_data$TREAT==1],

experiment_data$HISPANIC[experiment_data$TREAT==0],

"Hispanic")

categorical_covariate_balance(experiment_data$U74[experiment_data$TREAT==1],

experiment_data$U74[experiment_data$TREAT==0],

"Unemployed in 1974")

categorical_covariate_balance(experiment_data$U75[experiment_data$TREAT==1],

experiment_data$U75[experiment_data$TREAT==0],

"Unemployed in 1975")

numerical_covariate_balance(experiment_data$EDUC[experiment_data$TREAT==1],

experiment_data$EDUC[experiment_data$TREAT==0],

"Education")

numerical_covariate_balance(experiment_data$AGE[experiment_data$TREAT==1],

experiment_data$AGE[experiment_data$TREAT==0],

"Age")

numerical_covariate_balance(experiment_data$RE74[experiment_data$TREAT==1],

experiment_data$RE74[experiment_data$TREAT==0],

"1974 Income")

numerical_covariate_balance(experiment_data$RE75[experiment_data$TREAT==1],

experiment_data$RE75[experiment_data$TREAT==0],

"1975 Income")

| Covariate | $\bar{X}_1$ | $\bar{X}_0$ | $p$-value |

|---|---|---|---|

| Marriage | $0.19$ | $0.15$ | $0.327$ |

| No Degree | $0.71$ | $0.83$ | $0.0014$ |

| Black | $0.84$ | $0.83$ | $0.649$ |

| Hispanic | $0.06$ | $0.11$ | $0.076$ |

| Years Education | $10.35$ | $10.09$ | $0.14$ |

| Age | $25.82$ | $25.05$ | $0.265$ |

| $1974$ Earnings | $2095.57$ | $2107.03$ | $0.98$ |

| $1975$ Earnings | $1532.07$ | $1266.91$ | $0.382$ |

| Unemployed $1974$ | $0.71$ | $0.75$ | $0.326$ |

| Unemployed $1975$ | $0.60$ | $0.68$ | $0.065$ |

Studies of LaLonde (1986) and Dehejia & Wahba (1999)¶

LaLonde (1986), and later Dehejia & Wahba (1999), considered what would happen if traditional statistical methodologies were used to study data from an observational study, not an experiment.

As we already know the "correct" answer for this job training program experiment, they constructed an observational study using just the treated units in this experiment, and investigated whether they would get the same "correct" answer from the observational study.

Specifically, suppose we had data on only the 185 treated units from the job training program, and did not know a great deal about how these units came to be treated.

To learn about the effect of the job training program on annual earnings, we form a control group by collecting data on 2490 units drawn from the Panel Study of Income Dynamics.

We collect the same covariates and response for all units.

🤔 Do we get the correct causal inferences for the effect of the job training program on annual earnings from this observational study?

Naive Machine Learning for the Observational Study¶

observational_data = read.table("Lalonde_observational_data.txt", header=T, sep="")

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

observational_randomForest_treated = randomForest(x=observational_data_treated[,3:12],

y=observational_data_treated[,1])

observational_randomForest_control = randomForest(x=observational_data_control[,3:12],

y=observational_data_control[,1])

treatment_effect_estimate = mean(c((observational_data_treated[,1] -

predict(observational_randomForest_control, observational_data_treated[,3:12])),

(predict(observational_randomForest_treated, observational_data_control[,3:12]) -

observational_data_control[,1])))

print(paste("The treatment effect estimate obtained from random forests is:", treatment_effect_estimate))

number_of_bootstrap_draws = 10^3

treatment_effect_estimates = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

hist(treatment_effect_estimates, main="Bootstrap Distribution of Estimates of Causal Estimand", xlab="Treatment Effect")

mean(treatment_effect_estimates)

sd(treatment_effect_estimates)

quantile(treatment_effect_estimates, prob=c(0.025,0.25,0.5,0.75,0.975))

Machine learning did not correct the bias introduced by imbalances in the covariates (Imbens & Rubin, 2015: p. 277)!

Covariate Balance in the Observational Study¶

categorical_covariate_balance(observational_data$MARR[observational_data$TREAT==1],

observational_data$MARR[observational_data$TREAT==0],

"Marriage")

categorical_covariate_balance(observational_data$NODEGREE[observational_data$TREAT==1],

observational_data$NODEGREE[observational_data$TREAT==0],

"No High School Degree")

categorical_covariate_balance(observational_data$BLACK[observational_data$TREAT==1],

observational_data$BLACK[observational_data$TREAT==0],

"African-American")

categorical_covariate_balance(observational_data$HISPANIC[observational_data$TREAT==1],

observational_data$HISPANIC[observational_data$TREAT==0],

"Hispanic")

categorical_covariate_balance(observational_data$U74[observational_data$TREAT==1],

observational_data$U74[observational_data$TREAT==0],

"Unemployed in 1974")

categorical_covariate_balance(observational_data$U75[observational_data$TREAT==1],

observational_data$U75[observational_data$TREAT==0],

"Unemployed in 1975")

numerical_covariate_balance(observational_data$EDUC[observational_data$TREAT==1],

observational_data$EDUC[observational_data$TREAT==0],

"Education")

numerical_covariate_balance(observational_data$AGE[observational_data$TREAT==1],

observational_data$AGE[observational_data$TREAT==0],

"Age")

numerical_covariate_balance(observational_data$RE74[observational_data$TREAT==1],

observational_data$RE74[observational_data$TREAT==0],

"1974 Income")

numerical_covariate_balance(observational_data$RE75[observational_data$TREAT==1],

observational_data$RE75[observational_data$TREAT==0],

"1975 Income")

| Covariate | $\bar{X}_1$ | $\bar{X}_0$ | $p$-value |

|---|---|---|---|

| Marriage | $0.19$ | $0.87$ | $\approx 0$ |

| No Degree | $0.71$ | $0.31$ | $\approx 0$ |

| Black | $0.84$ | $0.25$ | $\approx 0$ |

| Hispanic | $0.06$ | $0.03$ | $0.08$ |

| Years Education | $10.35$ | $12.1$ | $\approx 0$ |

| Age | $25.82$ | $34.85$ | $\approx 0$ |

| $1974$ Earnings | $2095.57$ | $19429$ | $\approx 0$ |

| $1975$ Earnings | $1532.07$ | $19063$ | $\approx 0$ |

| Unemployed $1974$ | $0.71$ | $0.09$ | $\approx 0$ |

| Unemployed $1975$ | $0.60$ | $0.1$ | $\approx 0$ |

Complication in the Observational Study: Covariate Imbalance¶

In general, treated and control units in an observational study will differ (sometimes immensely) in terms of their covariates.

Bias in estimation of the treatment effect will then be introduced by imbalances in covariates.

Lalonde (1986): Traditional observational studies are fundamentally flawed.

Intuitively, we need to discard control units who are not comparable to treated units, so as to compare "like with like" and remove such biases.

🤔 How do we determine the units to discard in the presence of many covariates?

The Propensity Score and Subclassification/Matching¶

Just as in designed experiments, the propensity score plays a big role in the design, and ultimately analysis, of an observational study.

The propensity score for a unit $i$ is defined as (excluding technicalities at this point) $$ e(X_i) = \mathrm{Pr}(W_i = 1 \mid X_i). $$

💡 An estimated propensity score serves as a one-dimensional summary of all covariates.

If propensity scores are constant across treatment and control groups, then the covariates are identically distributed across the groups (Imbens & Rubin, 2015: p. 266, 277).

Discarding units with extreme propensity scores, and further subclassifying/matching the remaining units with respect to their propensity scores, can reduce the bias in estimating causal effects from observational studies (Imbens & Rubin, 2015: Parts III, IV).

Initial Design of the Observational Study¶

Consider estimation of propensity scores based on a logistic regression with main effects for all covariates.

Control units with estimated propensity scores lower than the minimum of the treated units' estimated propensity scores, or larger than the maximum of the treated units' estimated propensity scores, are discarded.

These units are irrelevant in any analyses we wish to perform.

We want to infer the treatment effect on units who resemble those in the treatment group, and we do not intend to extrapolate.

After discarding these units, 1208 control units remain.

Covariate Balance Among Remaining Units¶

propensity_score_model = glm(TREAT ~ MARR + NODEGREE + BLACK + HISPANIC + EDUC + AGE + RE74 + RE75 + U74 + U75,

data=observational_data, family=binomial)

propensity_scores = propensity_score_model$fitted.values

hist(propensity_scores[1:185], main="Estimated Propensity Scores for Treated Units", xlab="Propensity Score")

hist(propensity_scores[186:2675], main="Estimated Propensity Scores for Control Units", xlab="Propensity Score")

min_treat_prop = min(propensity_scores[1:185])

max_treat_prop = max(propensity_scores[1:185])

data = cbind(observational_data, propensity_scores)

treated_units = data[1:185,]

new_control_units = data[data$TREAT==0 & data$propensity_scores>=min_treat_prop & data$propensity_scores<=max_treat_prop,]

new_data = rbind(treated_units, new_control_units)

hist(propensity_scores[1:185], main="Estimated Propensity Scores for Treated Units", xlab="Propensity Score")

hist(new_control_units[,14], main="Estimated Propensity Scores for Remaining Control Units", xlab="Propensity Score")

categorical_covariate_balance(new_data$MARR[new_data$TREAT==1],

new_data$MARR[new_data$TREAT==0],

"Marriage")

categorical_covariate_balance(new_data$NODEGREE[new_data$TREAT==1],

new_data$NODEGREE[new_data$TREAT==0],

"No High School Degree")

categorical_covariate_balance(new_data$BLACK[new_data$TREAT==1],

new_data$BLACK[new_data$TREAT==0],

"African-American")

categorical_covariate_balance(new_data$HISPANIC[new_data$TREAT==1],

new_data$HISPANIC[new_data$TREAT==0],

"Hispanic")

categorical_covariate_balance(new_data$U74[new_data$TREAT==1],

new_data$U74[new_data$TREAT==0],

"Unemployed in 1974")

categorical_covariate_balance(new_data$U75[new_data$TREAT==1],

new_data$U75[new_data$TREAT==0],

"Unemployed in 1975")

numerical_covariate_balance(new_data$EDUC[new_data$TREAT==1],

new_data$EDUC[new_data$TREAT==0],

"Education")

numerical_covariate_balance(new_data$AGE[new_data$TREAT==1],

new_data$AGE[new_data$TREAT==0],

"Age")

numerical_covariate_balance(new_data$RE74[new_data$TREAT==1],

new_data$RE74[new_data$TREAT==0],

"1974 Income")

numerical_covariate_balance(new_data$RE75[new_data$TREAT==1],

new_data$RE75[new_data$TREAT==0],

"1975 Income")

| Covariate | $\bar{X}_1$ | $\bar{X}_0$ | $p$-value |

|---|---|---|---|

| Marriage | $0.19$ | $0.78$ | $\approx 0$ |

| No Degree | $0.71$ | $0.41$ | $\approx 0$ |

| Black | $0.84$ | $0.43$ | $\approx 0$ |

| Hispanic | $0.06$ | $0.05$ | $0.66$ |

| Years Education | $10.35$ | $11.3$ | $\approx 0$ |

| Age | $25.82$ | $31.9$ | $\approx 0$ |

| $1974$ Earnings | $2095.57$ | $11051$ | $\approx 0$ |

| $1975$ Earnings | $1532.07$ | $936$ | $\approx 0$ |

| Unemployed $1974$ | $0.71$ | $0.17$ | $\approx 0$ |

| Unemployed $1975$ | $0.60$ | $0.2$ | $\approx 0$ |

Machine Learning for the Remaining Units¶

observational_data_treated = new_data[new_data$TREAT==1,]

observational_data_control = new_data[new_data$TREAT==0,]

observational_randomForest_treated = randomForest(x=observational_data_treated[,3:12],

y=observational_data_treated[,1])

observational_randomForest_control = randomForest(x=observational_data_control[,3:12],

y=observational_data_control[,1])

treatment_effect_estimate = mean(c((observational_data_treated[,1] -

predict(observational_randomForest_control, observational_data_treated[,3:12])),

(predict(observational_randomForest_treated, observational_data_control[,3:12]) -

observational_data_control[,1])))

print(paste("The treatment effect estimate obtained from random forests is:", treatment_effect_estimate))

number_of_bootstrap_draws = 10^3

treatment_effect_estimates = rep(NA, number_of_bootstrap_draws)

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

hist(treatment_effect_estimates, main="Bootstrap Distribution of Estimates of Causal Estimand", xlab="Treatment Effect")

mean(treatment_effect_estimates)

sd(treatment_effect_estimates)

quantile(treatment_effect_estimates, prob=c(0.025,0.25,0.5,0.75,0.975))

Subclassification on the Estimated Propenstiy Scores¶

Estimation of treatment effects through propensity score subclassification consists of the following broad steps.

Form subclasses of units with similar propensity scores.

Check that balance has been achieved on covariates.

Estimate treatment effects individually for each subclass.

Calculate the weighted average of treatment effect estimates across subclasses to estimate the treatment effect.

For example, consider subclasses defined by the quantiles (0,0.6,0.8,0.9,0.95,1) of the estimated propensity scores.

The number of treated units in each subclass are: 6, 10, 43, 62, 64.

The number of control units in each subclass are: 830, 268, 06, 8, 6.

Covariate Balance Across Subclasses¶

quantiles = quantile(new_data$propensity_scores, probs=c(0, 0.6, 0.8, 0.9, 0.95, 1))

subclass_1 = new_data[new_data$propensity_scores<=quantiles[2],]

subclass_2 = new_data[new_data$propensity_scores<=quantiles[3] & new_data$propensity_scores>quantiles[2],]

subclass_3 = new_data[new_data$propensity_scores<=quantiles[4] & new_data$propensity_scores>quantiles[3],]

subclass_4 = new_data[new_data$propensity_scores<=quantiles[5] & new_data$propensity_scores>quantiles[4],]

subclass_5 = new_data[new_data$propensity_scores>quantiles[5],]

number_treated_units = c(sum(subclass_1$TREAT==1),

sum(subclass_2$TREAT==1),

sum(subclass_3$TREAT==1),

sum(subclass_4$TREAT==1),

sum(subclass_5$TREAT==1))

number_control_units = c(sum(subclass_1$TREAT==0),

sum(subclass_2$TREAT==0),

sum(subclass_3$TREAT==0),

sum(subclass_4$TREAT==0),

sum(subclass_5$TREAT==0))

number_treated_units

number_control_units

subclass = rep(NA, 1393)

for(i in 1:1393)

{

if(new_data$propensity_scores[i]<=quantiles[2]) subclass[i] = 1

if(new_data$propensity_scores[i]<=quantiles[3] & new_data$propensity_scores[i]>quantiles[2]) subclass[i] = 2

if(new_data$propensity_scores[i]<=quantiles[4] & new_data$propensity_scores[i]>quantiles[3]) subclass[i] = 3

if(new_data$propensity_scores[i]<=quantiles[5] & new_data$propensity_scores[i]>quantiles[4]) subclass[i] = 4

if(new_data$propensity_scores[i]>quantiles[5]) subclass[i] = 5

}

subclassified_data = cbind(new_data, subclass)

covariate_balance = function(subclass)

{

subclass_t_MARR = mean(subclass$MARR[subclass$TREAT==1])

subclass_c_MARR = mean(subclass$MARR[subclass$TREAT==0])

subclass_t_NODEGREE = mean(subclass$NODEGREE[subclass$TREAT==1])

subclass_c_NODEGREE = mean(subclass$NODEGREE[subclass$TREAT==0])

subclass_t_BLACK = mean(subclass$BLACK[subclass$TREAT==1])

subclass_c_BLACK = mean(subclass$BLACK[subclass$TREAT==0])

subclass_t_HISPANIC = mean(subclass$HISPANIC[subclass$TREAT==1])

subclass_c_HISPANIC = mean(subclass$HISPANIC[subclass$TREAT==0])

subclass_t_EDUC = mean(subclass$EDUC[subclass$TREAT==1])

subclass_c_EDUC = mean(subclass$EDUC[subclass$TREAT==0])

subclass_t_AGE = mean(subclass$AGE[subclass$TREAT==1])

subclass_c_AGE = mean(subclass$AGE[subclass$TREAT==0])

subclass_t_RE74 = mean(subclass$RE74[subclass$TREAT==1])

subclass_c_RE74 = mean(subclass$RE74[subclass$TREAT==0])

subclass_t_RE75 = mean(subclass$RE75[subclass$TREAT==1])

subclass_c_RE75 = mean(subclass$RE75[subclass$TREAT==0])

subclass_t_U74 = mean(subclass$U74[subclass$TREAT==1])

subclass_c_U74 = mean(subclass$U74[subclass$TREAT==0])

subclass_t_U75 = mean(subclass$U75[subclass$TREAT==1])

subclass_c_U75 = mean(subclass$U75[subclass$TREAT==0])

subclass_t = c(subclass_t_MARR, subclass_t_NODEGREE, subclass_t_BLACK,

subclass_t_HISPANIC, subclass_t_EDUC, subclass_t_AGE,

subclass_t_RE74, subclass_t_RE75, subclass_t_U74, subclass_t_U75)

subclass_c = c(subclass_c_MARR, subclass_c_NODEGREE, subclass_c_BLACK,

subclass_c_HISPANIC, subclass_c_EDUC, subclass_c_AGE,

subclass_c_RE74, subclass_c_RE75, subclass_c_U74, subclass_c_U75)

print(cbind(c("Covariate",

"Marriage",

"No High School Degree",

"African-American",

"Hispanic",

"Education",

"Age",

"1974 Income",

"1975 Income",

"Unemployed in 1974",

"Unemployed in 1975"),

c("Treated", round(subclass_t,5)),

c("Control", round(subclass_c,5))))

}

covariate_balance(subclass_1)

covariate_balance(subclass_2)

covariate_balance(subclass_3)

covariate_balance(subclass_4)

covariate_balance(subclass_5)

weights = number_treated_units/(sum(number_treated_units))

Final Analysis for the Designed Observational Study¶

options(warn=-1)

number_of_bootstrap_draws = 10^3

observational_data = subclass_1

treatment_effect_estimates_1 = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates_1[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

observational_data = subclass_2

treatment_effect_estimates_2 = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates_2[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

observational_data = subclass_3

treatment_effect_estimates_3 = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates_3[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

observational_data = subclass_4

treatment_effect_estimates_4 = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates_4[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

observational_data = subclass_5

treatment_effect_estimates_5 = rep(NA, number_of_bootstrap_draws)

observational_data_treated = observational_data[observational_data$TREAT==1,]

observational_data_control = observational_data[observational_data$TREAT==0,]

progress_bar = txtProgressBar(min=1, max=number_of_bootstrap_draws, style = 3)

for(i in 1:number_of_bootstrap_draws)

{

observational_data_new_treated = observational_data_treated[sample((1:nrow(observational_data_treated)), replace=TRUE),]

observational_randomForest_new_treated = randomForest(x=observational_data_new_treated[,3:12],

y=observational_data_new_treated[,1])

observational_data_new_control = observational_data_control[sample((1:nrow(observational_data_control)), replace=TRUE),]

observational_randomForest_new_control = randomForest(x=observational_data_new_control[,3:12],

y=observational_data_new_control[,1])

treatment_effect_estimates_5[i] = mean(c((observational_data_new_treated[,1] -

predict(observational_randomForest_new_control,

observational_data_new_treated[,3:12])),

(predict(observational_randomForest_new_treated,

observational_data_new_control[,3:12]) -

observational_data_new_control[,1])))

setTxtProgressBar(progress_bar, i)

}

close(progress_bar)

overall_treatment_effect_estimates = weights%*%rbind(t(treatment_effect_estimates_1),

t(treatment_effect_estimates_2),

t(treatment_effect_estimates_3),

t(treatment_effect_estimates_4),

t(treatment_effect_estimates_5))

hist(overall_treatment_effect_estimates, main="Bootstrap Distribution of Estimates of Causal Estimand", xlab="Treatment Effect")

quantile(overall_treatment_effect_estimates, prob=c(0.025,0.25,0.5,0.75,0.975))

Analyzing Designed Observational Studies¶

There are four broad classes of strategies for causal inferences from designed observational studies (Imbens & Rubin, 2015: p. 270 - 276).

Model-based imputation.

Inverse-propensity score weighted estimators.

- This strategy is similar to Horvitz-Thompson estimation in survey sampling.

- Dividing by extreme probabilities can lead to sensitive estimators.

Blocking estimators that use the propensity score.

- Can incorporate covariate adjustment within blocks.

Matching estimators.

Combinations of these strategies can also be implemented in practice.

Subclassification with covariate adjustment within subclasses, and matching with covariate adjustment, are particularly attractive methods.

Supplement: Designing Observational Studies Based on Propensity Scores¶

Propensity Score and Their Theoretical Properties¶

Recall the three assumptions for a regular assignment mechanism.

Individualistic Assignment¶

There exists a function $q: \mathbb{R}^{K+2} \rightarrow (0,1)$ such that for all subjects $i$, $$ p_i(\mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1)) = q(X_i, Y_i(0), Y_i(1)) $$ and $$\mathrm{Pr} \{ \mathbf{W} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \} = c \displaystyle \prod_{i=1}^N q(X_i, Y_i(0), Y_i(1))^{W_i}\{1-q(X_i,Y_i(0),Y_i(1))\}^{1-W_i} $$ for some set of $(\mathbf{W}, \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1))$, where $c$ is the normalization constant for the probability mass function of the treatment assignment mechanism.

Probabilistic Assignment¶

For all subjects $i$, $0 < p_i(\mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1)) < 1$.

Unconfounded Assignment¶

For any $\mathbf{w} \in \{0, 1\}^N$ and potential outcome vectors $\mathbf{Y}(0), \mathbf{Y}'(0), \mathbf{Y}(1), \mathbf{Y}'(1) \in \mathbb{R}^N$, $$ \mathrm{Pr} \{ \mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}(0), \mathbf{Y}(1) \} = \mathrm{Pr} \{ \mathbf{W} = \mathbf{w} \mid \mathbf{X}, \mathbf{Y}'(0), \mathbf{Y}'(1) \}. $$

☝ Besides sequential experiments (such as multi-armed bandits), the individualistic assignment mechanism is not particularly controversial.

☝ If an experimental unit receives treatment with either probability zero or probability one, then estimates of the treatment effect for similar such experimental units will necessarily involve extrapolation, and so such units should not be considered for causal inferences. The probabilistic assignment mechanism is thus intuitive and justifiable.

☝ The unconfoundedness assumption is perhaps the most controversial assumption for causal inference on observational studies under the Rubin Causal Model. Having said that, it is commonly invoked across a wide range of domains (Imbens & Rubin, 2015: p. 262 - 263).

These regularity assumptions do not merely enable causal inferences for randomized experiments. They can also enable valid causal inferences for observational studies.

💡 If an (unknown) assignment mechanism is regular, then for that assignment mechanism we have that

a completely randomized design was effectively conducted for subpopulations of experimental units with the same covariates, and that

a causal interpretation can be attached to the comparison of observed outcomes for treated and control units within the subpopulations.

The second implication holds because the observed outcomes within a particular subpopulation constitute a random sample of the potential outcomes for that subpopulation, so that the difference in observed averages is unbiased for the subpopulation average treatment effect.

💡 The fact that the assignment mechanism is unknown does not matter for this result.

These desirable implications of a regular assignment mechanism can be operationalized for deriving valid, unbiased causal inferences from observational studies that have regular assignment mechanisms by means of the propensity scores $$ e(X_i) = \mathrm{Pr}(W_i = 1 \mid X_i). $$

Formal Derivations of the Properties of Propensity Scores¶

Treatment Assignment Does Not Depend on Covariates Given Propensity Scores¶

Claim : For a regular assignment mechanism, $\mathrm{Pr} \left \{ W_i = 1 \mid X_i, e(X_i) \right \} = \mathrm{Pr} \left \{ W_i = 1 \mid e(X_i) \right \}$.

Proof : As $e(X_i)$ is a function of $X_i$, $\mathrm{Pr} \left \{ W_i = 1 \mid X_i, e(X_i) \right \} = \mathrm{Pr} \left \{ W_i = 1 \mid X_i \right \} = e(X_i)$. Also, by ADAM's Law,

\begin{align} \mathrm{Pr} \left \{ W_i = 1 \mid e(X_i) \right \} &= \mathbb{E} \left \{ W_i \mid e(X_i) \right \} \\ &= \mathbb{E} \left \{ \mathbb{E} \left \{ W_i \mid X_i, e(X_i) \right \} \mid e(X_i) \right \} \\ &= \mathbb{E} \left \{ e(X_i) \mid e(X_i) \right \} \\ &= e(X_i). \end{align}We thus immediately have the result. ∎

💡 An estimated propensity score serves as a one-dimensional summary of all covariates, such that experimental units with the same propensity score values have similar covariates.

💡 If many covariates are considered for the design and analysis of an observational study (e.g., so as to ensure unconfoundedness), it can be sufficient to design the study based on the propensity score so as to yield balance on the covariates involved in the propensity score.

Unconfoundedness Given the Propensity Score¶

Claim : For an unconfounded treatment assignment mechanism, the treatment assignment is unconfounded given the propensity scores, i.e., $$ \mathrm{Pr} \left \{ W_i \mid Y_i(0), Y_i(1), e(X_i) \right \} = \mathrm{Pr} \left \{ W_i \mid e(X_i) \right \} = e(X_i). $$

Proof :

\begin{align} \mathrm{Pr} \left \{ W_i = 1 \mid Y_i(0), Y_i(1), e(X_i) \right \} &= \mathbb{E} \left \{ W_i \mid Y_i(0), Y_i(1), e(X_i) \right \} \\ &= \mathbb{E} \left \{ \mathbb{E} \left \{ W_i \mid Y_i(0), Y_i(1), X_i, e(X_i) \right \} \mid Y_i(0), Y_i(1), e(X_i) \right \} \\ &= \mathbb{E} \left \{ \mathbb{E} \left \{ W_i \mid X_i, e(X_i) \right \} \mid Y_i(0), Y_i(1), e(X_i) \right \} \\ &= e(X_i) \end{align}💡 Given a vector of covariates that ensure unconfoundedness, adjustment for treatment-control differences in propensity scores suffices for removing biases associated with the differences in covariates.

- Conditional on propensity score, treatment assignment is independent of the covariates. Even if a covariate is associated with the potential outcomes, differences in covariates between treated and control units do not lead to bias because they cancel out by averaging over all units with the same value for the propensity score.

☝ This situation is analogous to that of a completely randomized design.

The Propensity Score is the Coarsest Balancing Score¶

Claim : If $b(X_i)$ is a function of the covariates such that $\mathrm{Pr} \left \{ W_i = 1 \mid X_i, b(X_i) \right \} = \mathrm{Pr} \left \{ W_i = 1 \mid b(X_i) \right \}$ (i.e., $b(X_i)$ is a balancing score), then $e(X_i) = g(b(X_i))$ for some function $g$.

💡 The propensity score provides the biggest benefit in terms of reducing the number of variables we need to adjust for.

The Importance and Controversy of Unconfoundedness¶

The unconfoundeness assumption is critical to the utility of regular assignment mechanisms.

For an assignment mechanism to be unconfounded, we must have a sufficiently rich set of covariates such that adjusting for differences in their observed values between the treatment and control groups will remove systematic biases in the causal inferences.

The unconfoundness assumption is typically the most controversial assumption for causal inferences on observational studies under the Rubin Causal Model.

Furthermore, this assumption is not testable: the data are not directly informative about the distribution of $Y_i(0)$ for those units $i$ given treatment, nor are they directly informative about the distribution of $Y_j(1)$ for those units $j$ given control.

Our inability to test unconfoundedness in general is similar to our inability to test whether a missing data mechanism is missing not at random (MNAR) or missing at random (MAR).

As unconfoundedness is such an important and controversial assumption, supplementary analyses that can assess its plausibility can be important for drawing causal conclusions.

The Superpopulation Perspective in Observational Studies¶

The superpopulation perspective can be helpful for evaluating the frequentist properties of causal inference methods for observational studies with regular assignment mechanisms.

☝ In the previous derivations, covariates $X_i$ were considered to have been randomly drawn from a distribution.

The superpopulation perspective on unconfoundedness is also helpful for clarifying how the distributions of missing and observed potential outcomes are related.

💡 We have under unconfoundedness that for all $i = 1, \ldots, N$, $$ \left ( Y_i^{\mathrm{mis}} \mid W_i = w, X_i \right ) \sim \left ( Y_i^{\mathrm{obs}} \mid W_i = 1 - w, X_i \right), $$ so that as $Y_i^{\mathrm{mis}} = (1-W_i)Y_i(1) + W_iY_i(0)$, $Y_i^{\mathrm{obs}} = W_iY_i(1) + (1-W_i)Y_i(0)$, the distribution of a missing potential outcome equals the distribution of an observable outcome.

This is important because the data are not directly informative about the distribution of $Y_i^{\mathrm{mis}}$ unless we have an assumption such as unconfoundedness that connects this distribution to distributions that can be inferred from the data.

☝ Unconfoundedness is not a testable assumption. However, it does imply that one should compare "like with like", and thus it is well-motivated.

- If we did not have unconfoundedness, then we would have to stratify or match individuals with systematic differences in covariates (Imbens & Rubin, 2015: p. 264).

Although unconfoundedness is not testable, there do exist analyses that can be done to assess its validity.

- Estimate the treatment effect on an outcome known a priori not to be affected by treatment.

- Estimate the effect of a pseudo-treatment on the outcome.

- Assess the sensitivty of the estimates to the choice of covariates.

The Central Role of the Propensity Score¶

If unconfoundedness is valid for an observational study, then biases in the causal inferences can be removed by adjusting for differences in covariates.

Such adjustments can be difficult to perform if there are a large number of covariates.

The propensity score is effectively a low-dimensional summary of covariates that is sufficient for removing biases in causal inferences from observational studies in which treatment and control groups differ in terms of covariates.

☝ In practice, it may not be necessary to get precise inferences on propensity scores. Instead, we use propensity score models as devices for designing observational studies with good covariate balance.

Do not look at observed outcomes during the design of an observational study (Imbens & Rubin, 2015: p. 276).¶

Assess balance in covariate distributions between treated and control units.

- Standardized difference in average covariate values by treatment group.

- When treatment groups have important covariates that are more than $1/4$ or $1/2$ of a standard deviation apart, simple regression methods are unreliable for removing biases (Imbens & Rubin, 2015: p. 277).

- Distributions of the propensity scores.

- Standardized difference in average covariate values by treatment group.

Trimming using the propensity score.

- There are two different trimming approaches that are relevant for the two different estimands of the average treatment effect (ATE) and average treatment effect for the treated (ATT).

- For the ATE estimand, remove control units whose propensity scores are less than the minimum of the treated units' propensity scores, and remove treated units who propensity scores are greater than the maximum of the control units' propensity scores.

🤔 What could be an issue with this approach? - For the ATT estimand, follow the previous procedure.

- For more information, read the following article.

- For the ATE estimand, remove control units whose propensity scores are less than the minimum of the treated units' propensity scores, and remove treated units who propensity scores are greater than the maximum of the control units' propensity scores.

- There are two different trimming approaches that are relevant for the two different estimands of the average treatment effect (ATE) and average treatment effect for the treated (ATT).

Subclassification or matching.

- If you have a large pool of control units, consider matching. Otherwise, consider subclassification.

Covariate imbalances in observational studies can make causal inferences sensitive to changes in analysis methods (e.g., standard Neymanian inferences versus model-based inferences) and their specifications (e.g., covariates included in potential outcomes models).

In addition, covariate imbalances can make causal inferences imprecise.

Performing a design phase for an observational study can lead to robust and valid causal inferences for the sample of subjects included in the study.

For an observational study with a regular assignment mechanism, the estimated propensity scores can be useful for designing the observational study and obtaining valid, unbiased causal inferences.

The goal in estimating the propensity score is to design the observational study based on the estimates so as to obtain balanced covariate distributions between the treatment and control groups.

- We estimate the propensity score so as to group together experimental units with similar estimated propensity scores and obtain subgroups of treated and control units with similar covariate distributions.

The goal in estimating the propensity score is *not* to obtain the best estimate of the true propensity scores.

Sometimes estimated propensity scores are better than true propensity scores in terms of yielding designed observational studies with good covariate balance.

Typically, we are not interested in interpreting the propensity scores, although assessing the strength and nature of the dependence of treatment assignment on the covariates could be helpful for assessing the unconfoundedness assumption.

General Procedure for Estimating Propensity Scores and Designing Observational Studies¶

Specify an initial propensity score model that contains covariates thought a priori to be correlated with treatment assignment and/or the potential outcomes.

Assess the covariate balance for the designed observational study based on the previously specified and fitted propensity score model.

Return to Step 1 if the covariate balance in the designed observational study obtained via the previous propensity scores as unsatisfactory.

These steps can be performed as often necessary.

In all these steps, we do not look at the outcomes. This helps to prevent deliberately biasing the final causal inferences.

It is difficult to automate this procedure, for the same reason that it is difficult to automate experimental design.

- Imbens & Rubin (2015, p. 285 - 288) give one such procedure, but it should be viewed as an "initialization" of the general procedure above.

Subject-matter knowledge and domain experts are essential to the success of this procedure.

Don't blindly trust automated procedures. Explicitly check the balance of the covariates after designing the observational study.

Constructing Strata Based on Estimated Propensity Scores¶

The adequacy of a propensity score model specification can be assessed by means of the property that, for a regular assignment mechanism, $\mathrm{Pr} \left \{ W_i = 1 \mid X_i, e(X_i) \right \} = \mathrm{Pr} \left \{ W_i = 1 \mid e(X_i) \right \}$.

Specifically, using the estimates $\hat{e}(X_i)$ of the propensity scores, check whether the covariates are independent of the treatment group.

- This precisely corresponds to good covariate balance.

This assessment is done after constructing subclasses/strata/blocks of experimental units such that the units within a specific subclass have similar propensity scores (i.e., the propensity score estimates within a subclass have small variability).

If subclasses have small variability in the propensity score estimates, but poor covariate balance, then the propensity score model is most likely poorly specified.

Trimming the experimental units based on the propensity score estimates can be helpful, but be aware of how trimming could be connected to the choice of estimand (e.g., trimming procedures can differ depending on whether the ATE or ATT causal estimand is of interest (Imbens & Rubin, 2015: p. 313)).

Imbens & Rubin (2015, p. 293 - 294) provide an automated procedure for propensity score subclassification based on propensity score estimates.

- Automated procedures can be very useful for research on design methodologies for observational studies, as they facilitate the implementation of simulation studies. However, they may serve as only first steps for constructing an appropriate design in real-life observational studies.

Assessing Covariate Balance Based on Propensity Score Estimates¶

We are interested in comparing two multivariate covariate distributions: one for the treatment group, and the other for the control group.

In practice, we compare the centers and spreads/dispersions of the two distributions.